Research Interests

We sense humans by combining several sources of information (brain and physiological signals, facial expressions, eye-tracking…)

We apply machine learning, deep learning and hypothesis testing

We interpret signals to assess people’s emotional states and social behaviors (but not only!)

We aim to measure people in their ecological environment

We enhance interaction

We love games and entertainment, and want to promote fun

Projects

Multimodal and emotional gaming smartband

The goal of this project is to integrate contextual gaming information (e.g. game type, game events, etc.) with the physiological reactions of gamers to assess their emotions. This will be achieved by applying deep learning methods for semi-supervised emotion recognition and physiological signal denoising.

AI-powered wound care monitoring and assessment

In this project, we propose AI-based and serious-game-based solutions that provide guidance and training to healthcare professionals (HCPs) so that they can precisely document the characteristics, treatment and evolution of wounds.

Emotions for game streaming

With this project the partners will develop a new generation of emotion-enabled peripherals. It will deliver the first-of-its-kind real-time emotional monitoring system for game streaming, allowing for automatically augmenting exciting moments in live broadcasts or editing a digest video with such highlights.

Impressions

The goal of this project is to build an anthropomorphic virtual character (ECA - Embodied Conversational Agent) able to make the best possible first impression on a user, thus effectively engaging him or her in an interaction.

Emotional and aesthetic highlights detection in movies

In this project, we test the degree of emotional participation of the audience in film watching. How do viewers react to the sequences in a given movie that film critics and filmmakers consider as aesthetically successful and therefore emotionally relevant?

EATMINT

The project “Affective computing and emotion awareness in computer-mediated interaction” aims at developing emotion awareness tools (EAT) to improve the collaborative processes and outcomes of people working together through computers.

Resources

The AMuCS database contains multi-modal and multi-user recordings of physiological signals and behaviors in an e-sport setting where participants played a 1v1 or 2v2 first-person shooter game. The following signals were recorded for 72 sessions (255 participants):

- Physiological signals: electrocardiogram, electrodermal activity, respiration;

- Behaviors: eye gaze and pupil size (eye-tracking), facial expressions, depth video, seat posture (pressure mat), software actions logs;

- Game: gameplay video, game event logs;

- Annotations: continuous self-annotated arousal or valence.

The data was collected in an ecological setting at 4 LAN events in Switzerland.

This is a collection of LSL (Lab Streaming Layer) modules that we developed or adapted and used for the AMuCS database. It includes LSL modules for the following:

- Bitalino physiological signals

- SensingTex seat pressure mat

- Intel RealSense D435 stereo depth camera

- Tobii pro eye-tracker

- keyboard and mouse events

- Counter-Strike: Global Offensive LSL extension and LSL plugin (source mod)

- A simple signal visualizer

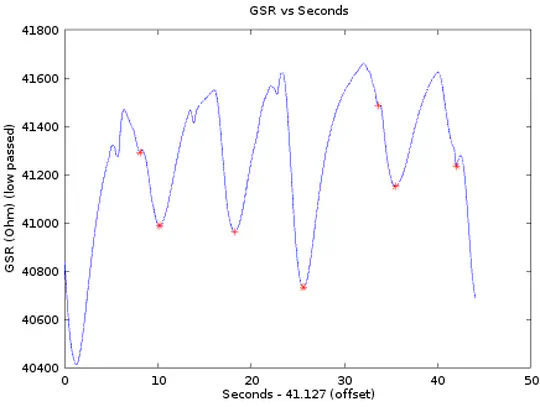

The EATMINT database contains multi-modal and multi-user recordings of affect and social behaviors in a collaborative setting. The following signals were recorded for 30 dyads (i.e. 60 participants):

- Physiological signals: electrocardiogram, electrodermal activity (GSR), blood volume pulse, respiration and skin temperature;

- Behaviors: eye-movements (eye-tracking), facial expressions, software actions logs;

- Discourse: speech signals and transcripts.

Each interaction is annotated in terms of affect, collaboration and social aspects (e.g. conflict, grounding, etc.).

TEAPhysio, the Toolbox for Emotion Analysis using Physiological signals, is a Matlab (fully Octave compliant) toolbox that aims to reduce code dispersion and duplication across your research projects.

TEAP supports various signals (ECG, BVP, GSR, EEG, etc.) and its aim is to allow the user to quickly use the toolbox and compute signal features without any boilerplate code. TEAP is also written in an object-oriented style: it is easy to add signal feature code or another signal structure to the toolbox.

GamEMO is an automatic emotion assessment installation used for dynamic difficulty adjustment in games. The goal of the system is to keep the player in a state of entertainment and engagement where their skills match the difficulty level of the game. The player’s physiological signals are recorded while they play Tetris, and signal processing, feature extraction and classification techniques are applied to the signals to detect when the player is anxious or bored. The level of the Tetris game is then adjusted according to the player’s detected emotional state.
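The final step described above, adjusting the game level from the detected emotional state, could be sketched as follows. This is a minimal illustration and not the GamEMO implementation: the `PlayerState` labels, the `adjust_level` function, the one-level step and the 1 to 10 level range are all assumptions made for the example, and the classifier that produces the state from physiological signals is left out.

```python
from enum import Enum


class PlayerState(Enum):
    """Hypothetical labels for the classifier output."""
    BORED = "bored"
    ENGAGED = "engaged"
    ANXIOUS = "anxious"


def adjust_level(level: int, state: PlayerState,
                 min_level: int = 1, max_level: int = 10) -> int:
    """Return the next difficulty level for the detected state.

    Assumed rule: raise the level when the player is bored, lower it
    when the player is anxious, and leave it unchanged otherwise.
    The result is clamped to [min_level, max_level].
    """
    if state is PlayerState.BORED:
        level += 1
    elif state is PlayerState.ANXIOUS:
        level -= 1
    return max(min_level, min(max_level, level))


if __name__ == "__main__":
    level = 5
    # Simulated sequence of detected states (illustrative only).
    for state in (PlayerState.BORED, PlayerState.BORED, PlayerState.ANXIOUS):
        level = adjust_level(level, state)
        print(state.value, "->", level)
```

In a real system the step size and the detection window would need tuning so that the level does not oscillate between two values.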

News and media

Contact

- guillaume.chanel@unige.ch

- +41 22 379 01 83

- route de Drize 7 - Battelle Bat. A, Carouge, GE 1227

- Follow the detailed information here, then enter Building A (CUI) and take the stairs to the first floor. Ring at the door on your left.

- Follow us on Twitter